AWS Network Load Balancer (NLB) on Terraform

This week, I learned about AWS Network Load Balancer. I had been using Application Load Balancer (ALB) in my previous projects, but now I wanted to understand when and why to use Network Load Balancer instead.

The difference is smaller than I thought! I took my Auto Scaling project and just swapped the ALB with NLB. Most of my infrastructure stayed exactly the same.

What’s Different from the ALB Project?

What stayed exactly the same (99% of the project):

- VPC configuration

- Auto Scaling Group with Launch Template

- Target Tracking policy (CPU-based)

- Scheduled scaling (7 AM and 5 PM)

- SNS notifications

- Security groups

- Bastion host

- Route53 DNS

- ACM certificate

- All the same files (c1 through c9, c11, c13-01 to c13-05, c13-07), plus c12 with only the DNS record name and module reference updated

What’s different (only 1% changed):

- Load Balancer type: NLB instead of ALB

- Listeners: TCP and TLS instead of HTTP and HTTPS

- Removed: ALB request count scaling policy

- Only 2 files changed!

This surprised me: Changing from ALB to NLB was much easier than I expected. I thought I’d need to rewrite a lot of code, but actually only 2 files changed!

Why Use Network Load Balancer?

Before I explain the code changes, let me tell you when to use NLB vs ALB.

Application Load Balancer (ALB) – Layer 7

Good for:

- Web applications with HTTP/HTTPS

- Path-based routing (/app1, /app2, /api)

- Host-based routing (multiple domains)

- Looking at HTTP headers

- WebSocket applications

- Lambda functions as targets

Example uses:

- E-commerce website with /products, /cart, /checkout

- API Gateway with different paths

- Microservices architecture

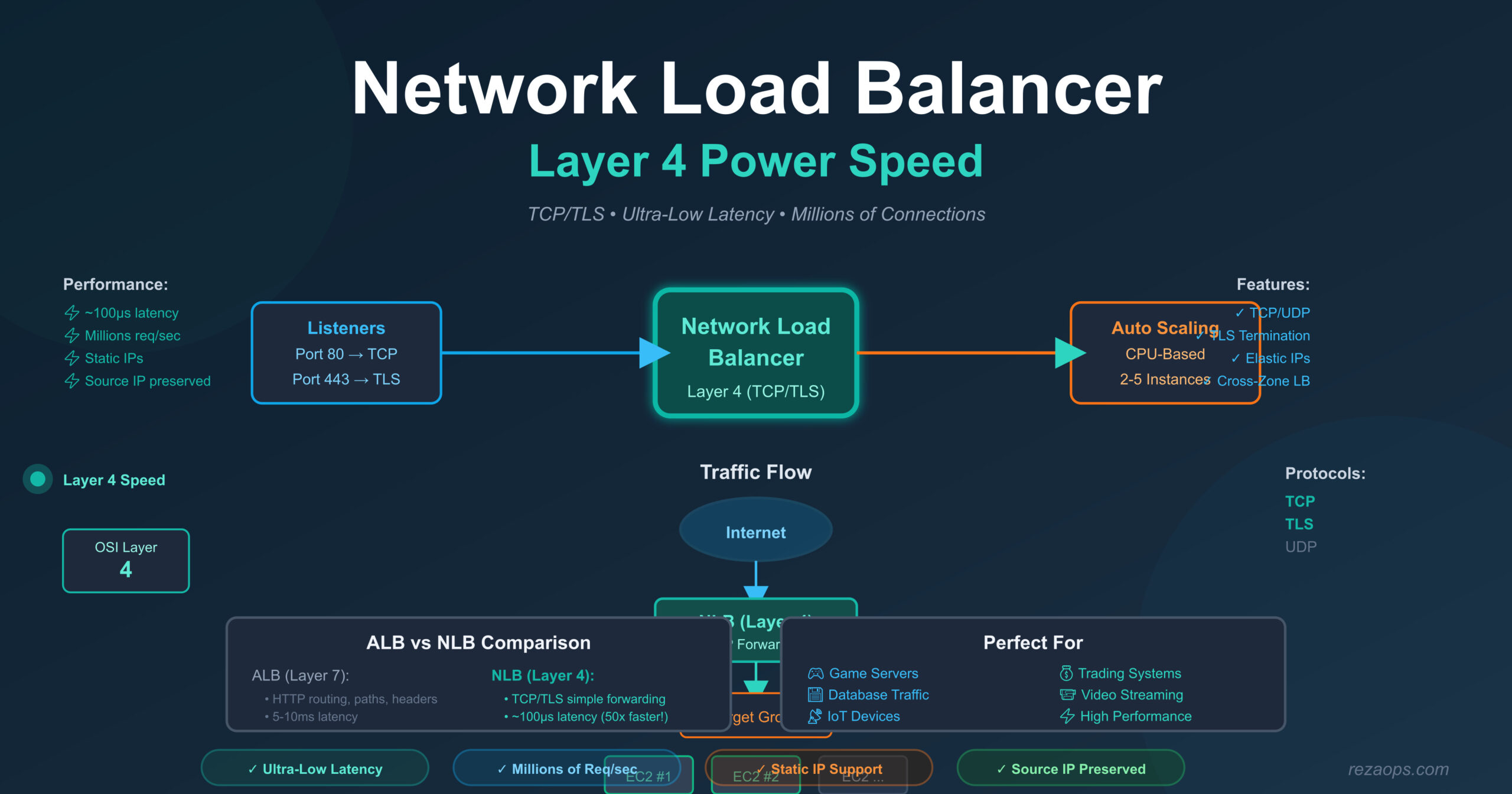

Network Load Balancer (NLB) – Layer 4

Good for:

- Ultra-high performance (millions of requests per second)

- Very low latency (microseconds vs milliseconds)

- Static IP addresses (one per Availability Zone, optionally Elastic IPs)

- TCP and UDP traffic

- Non-HTTP protocols (databases, game servers, IoT)

- Extreme performance requirements

Example uses:

- Game servers (need very low latency)

- Database connections (TCP traffic)

- IoT devices sending data

- Financial trading systems (speed critical)

- Video streaming servers

- VoIP applications

Simple Decision Guide

Use ALB when:

- Your app is HTTP/HTTPS ✓

- You need smart routing (paths, headers) ✓

- You want to see application-level metrics ✓

- Latency of 5-10ms is okay ✓

Use NLB when:

- You need extreme performance ✓

- You need static IP addresses ✓

- You’re using TCP/UDP (not HTTP) ✓

- Every millisecond matters ✓

- You have millions of requests per second ✓

My project: I’m using NLB even though my app is HTTP, just to learn how it works. In real life, ALB would be better for my simple web application.

The Two Files That Changed

Let me show you exactly what changed.

Change 1: The Load Balancer (c10-02)

This is the biggest change, but it’s actually quite simple.

Old ALB Code:

```hcl
# Application Load Balancer
module "alb" {
  source  = "terraform-aws-modules/alb/aws"
  version = "9.11.0"

  name               = "${local.name}-alb"
  load_balancer_type = "application" # ← Layer 7
  vpc_id             = module.vpc.vpc_id
  subnets            = module.vpc.public_subnets
  security_groups    = [module.loadbalancer_sg.security_group_id]

  listeners = {
    # HTTP to HTTPS redirect
    my-http-https-redirect = {
      port     = 80
      protocol = "HTTP"
      redirect = {
        port        = "443"
        protocol    = "HTTPS"
        status_code = "HTTP_301"
      }
    }

    # HTTPS listener with path routing
    my-https-listener = {
      port            = 443
      protocol        = "HTTPS"
      ssl_policy      = "ELBSecurityPolicy-TLS13-1-2-Res-2021-06"
      certificate_arn = module.acm.acm_certificate_arn

      fixed_response = {
        content_type = "text/plain"
        message_body = "Fixed Static message - for Root Context"
        status_code  = "200"
      }

      rules = {
        myapp1-rule = {
          actions = [{
            type          = "weighted-forward"
            target_groups = [{ target_group_key = "mytg1", weight = 1 }]
            stickiness    = { enabled = true, duration = 3600 }
          }]
          conditions = [{
            path_pattern = { values = ["/*"] }
          }]
        }
      }
    }
  }
}
```

New NLB Code:

```hcl
# Network Load Balancer
module "nlb" {
  source  = "terraform-aws-modules/alb/aws" # Same module!
  version = "9.11.0"

  name               = "mynlb"
  load_balancer_type = "network" # ← Layer 4 (main change!)
  vpc_id             = module.vpc.vpc_id
  subnets            = module.vpc.public_subnets

  dns_record_client_routing_policy = "availability_zone_affinity"

  security_groups = [module.loadbalancer_sg.security_group_id]

  listeners = {
    # TCP Listener (no redirect, just forward)
    my-tcp = {
      port     = 80
      protocol = "TCP" # ← Not HTTP!
      forward = {
        target_group_key = "mytg1"
      }
    }

    # TLS Listener (encrypted TCP)
    my-tls = {
      port            = 443
      protocol        = "TLS" # ← Not HTTPS!
      certificate_arn = module.acm.acm_certificate_arn
      forward = {
        target_group_key = "mytg1"
      }
    }
  }
}
```

What changed – explained line by line:

- Module name: module "alb" → module "nlb"
  - Just renamed for clarity

- Load balancer name: "${local.name}-alb" → "mynlb"
  - Simpler name

- Load balancer type: "application" → "network"
  - This is the main change! Switches from Layer 7 to Layer 4

- Added DNS policy: dns_record_client_routing_policy = "availability_zone_affinity"
  - NLB-specific setting
  - Resolves the NLB’s DNS name to an IP in the client’s own Availability Zone when possible (this applies to clients that resolve through Route 53 Resolver inside AWS)
  - Keeps traffic in-zone, which reduces latency

- TCP Listener (port 80):
  - Protocol: "HTTP" → "TCP"
  - No redirect: NLB doesn’t understand HTTP, so it can’t redirect
  - Simple forward: Just sends traffic to the target group
  - No redirect block, no fixed_response, no rules

- TLS Listener (port 443):
  - Protocol: "HTTPS" → "TLS"
  - TLS is encrypted TCP (like HTTPS is encrypted HTTP)
  - No explicit SSL policy: NLB TLS listeners do support one, but I left it out, so a default policy applies
  - No rules, no fixed response: NLB just forwards traffic
  - Certificate is used for encryption, but no HTTP-level features
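If you would rather pin the TLS policy than rely on a default, the TLS listener can carry the same ssl_policy argument the ALB listener used. This is only a sketch of how that might look: the fragment belongs inside the listeners map of module "nlb", and reusing the ALB project's policy name is my assumption about what you would want here.

```hcl
# Fragment: goes inside listeners = { ... } of module "nlb"
my-tls = {
  port            = 443
  protocol        = "TLS"
  certificate_arn = module.acm.acm_certificate_arn

  # Assumption: reuse the policy name from the ALB project
  ssl_policy = "ELBSecurityPolicy-TLS13-1-2-Res-2021-06"

  forward = {
    target_group_key = "mytg1"
  }
}
```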

What was removed:

- HTTP to HTTPS redirect (NLB can’t do this)

- Fixed response message

- Path-based routing rules

- Stickiness configuration (the ALB rule-level kind)

- Explicit SSL policy (the TLS listener falls back to a default)

Why were these removed? Because NLB operates at Layer 4 (TCP), it doesn’t understand Layer 7 (HTTP) concepts like:

- URLs and paths

- HTTP methods (GET, POST)

- HTTP headers

- HTTP redirects

- HTTP status codes
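One nuance on stickiness: the ALB-style stickiness is gone, but a TCP target group can still pin clients to a target by source IP. Here is a minimal sketch written as a standalone aws_lb_target_group resource. My project defines mytg1 inside the module instead, so the resource name, the target group name, and port 80 are all assumptions for illustration.

```hcl
# Hypothetical standalone target group, not part of my project
resource "aws_lb_target_group" "sticky_example" {
  name        = "sticky-example"
  port        = 80 # assumption: Apache listens on port 80
  protocol    = "TCP"
  target_type = "instance"
  vpc_id      = module.vpc.vpc_id

  # Layer 4 stickiness: keyed on the client's source IP, not a cookie
  stickiness {
    enabled = true
    type    = "source_ip"
  }
}
```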

Change 2: Scaling Policies (c13-06)

Old code (with ALB):

```hcl
# Policy 1: CPU-based scaling
resource "aws_autoscaling_policy" "avg_cpu_policy_greater_than_xx" {
  name                      = "avg-cpu-policy-greater-than-xx"
  policy_type               = "TargetTrackingScaling"
  autoscaling_group_name    = aws_autoscaling_group.my_asg.id
  estimated_instance_warmup = 180

  target_tracking_configuration {
    predefined_metric_specification {
      predefined_metric_type = "ASGAverageCPUUtilization"
    }
    target_value = 50.0
  }
}

# Policy 2: ALB request count scaling
resource "aws_autoscaling_policy" "alb_target_requests_greater_than_yy" {
  name                      = "alb-target-requests-greater-than-yy"
  policy_type               = "TargetTrackingScaling"
  autoscaling_group_name    = aws_autoscaling_group.my_asg.id
  estimated_instance_warmup = 120

  target_tracking_configuration {
    predefined_metric_specification {
      predefined_metric_type = "ALBRequestCountPerTarget"
      resource_label         = "${module.alb.arn_suffix}/${module.alb.target_groups["mytg1"].arn_suffix}"
    }
    target_value = 10.0
  }
}
```

New code (with NLB):

```hcl
# Policy 1: CPU-based scaling (same as before!)
resource "aws_autoscaling_policy" "avg_cpu_policy_greater_than_xx" {
  name                      = "avg-cpu-policy-greater-than-xx"
  policy_type               = "TargetTrackingScaling"
  autoscaling_group_name    = aws_autoscaling_group.my_asg.id
  estimated_instance_warmup = 180

  target_tracking_configuration {
    predefined_metric_specification {
      predefined_metric_type = "ASGAverageCPUUtilization"
    }
    target_value = 50.0
  }
}

# Policy 2: REMOVED - ALB request count doesn't exist for NLB
```

What changed:

- Kept: CPU-based scaling (this works with any load balancer)

- Removed: ALB request count scaling

- Removed: Output for resource_label

Why remove the second policy?

The metric ALBRequestCountPerTarget is specific to Application Load Balancer. This metric counts HTTP requests.

Network Load Balancer doesn’t have this metric because:

- NLB doesn’t understand HTTP requests

- NLB works at TCP level (lower than HTTP)

- NLB only counts TCP connections, not HTTP requests

What metrics DOES NLB have?

- Active TCP connections (ActiveFlowCount)

- New TCP connections (NewFlowCount)

- Processed bytes (ProcessedBytes)

- Healthy/unhealthy hosts (HealthyHostCount / UnHealthyHostCount)

But there’s no built-in “request count per target” for NLB, so I removed that scaling policy.
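These metrics live in the AWS/NetworkELB CloudWatch namespace, so even without a request-count policy they can still drive alerts. Here is a rough sketch (not part of my project) of an alarm on UnHealthyHostCount. The alarm name and the SNS topic reference are assumptions for illustration, and I am also assuming the nlb module exposes target_groups["mytg1"].arn_suffix the same way the alb module did.

```hcl
# Hypothetical alarm: fires when any target behind the NLB is unhealthy
resource "aws_cloudwatch_metric_alarm" "nlb_unhealthy_hosts_example" {
  alarm_name          = "nlb-unhealthy-hosts-example"
  namespace           = "AWS/NetworkELB"
  metric_name         = "UnHealthyHostCount"
  statistic           = "Maximum"
  period              = 60
  evaluation_periods  = 2
  comparison_operator = "GreaterThanThreshold"
  threshold           = 0

  dimensions = {
    LoadBalancer = module.nlb.arn_suffix
    TargetGroup  = module.nlb.target_groups["mytg1"].arn_suffix
  }

  # Assumption: an SNS topic with this resource name exists in the project
  alarm_actions = [aws_sns_topic.myasg_sns_topic.arn]
}
```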

Could I add a different NLB scaling policy?

Yes! I could scale based on TCP connections:

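```hcl
# Example NLB scaling policy (not in my code)
resource "aws_autoscaling_policy" "nlb_active_connections" {
  name                      = "nlb-active-connections-scaling"
  policy_type               = "TargetTrackingScaling"
  autoscaling_group_name    = aws_autoscaling_group.my_asg.id
  estimated_instance_warmup = 120

  target_tracking_configuration {
    customized_metric_specification {
      # NLB publishes ActiveFlowCount; ActiveConnectionCount is an ALB metric
      metric_name = "ActiveFlowCount"
      namespace   = "AWS/NetworkELB"
      statistic   = "Average"

      # The dimension tells CloudWatch which load balancer to read
      metric_dimension {
        name  = "LoadBalancer"
        value = module.nlb.arn_suffix
      }
    }

    # Note: this is the total across the NLB, not a per-instance value
    target_value = 1000.0
  }
}
```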

But I didn't add this because:

1. I wanted to keep it simple

2. CPU-based scaling is usually enough

3. Scheduled scaling handles predictable traffic

## How Everything Works with NLB

Let me explain the complete flow:

### Normal Traffic Flow

```
User's browser → DNS (nlb.rezaops.com)
        ↓
Network Load Balancer (Layer 4)
        ↓ (TCP/TLS connection)
Target Group (mytg1)
        ↓
Auto Scaling Group (2-5 instances)
        ↓
EC2 Instance running Apache HTTP server
```

Key differences from ALB:

- NLB doesn’t look at HTTP:
  - ALB: “Oh, this is a GET request to /app1/index.html”
  - NLB: “This is TCP traffic on port 80, forwarding…”

- NLB is faster (ballpark figures, not AWS guarantees):
  - ALB: ~5-10ms latency (needs to read HTTP)
  - NLB: ~100 microseconds latency (just forwards TCP)

- NLB preserves source IP:
  - My EC2 instances see the real client IP
  - With ALB, instances see ALB’s IP (the client IP is passed along in the X-Forwarded-For header instead)

- NLB can have static IPs:
  - I can assign Elastic IPs to NLB
  - Useful for whitelisting in firewalls
  - ALB can’t do this directly
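My project doesn't actually attach Elastic IPs, but here is a sketch of how it could look with the plain aws_lb resource and one Elastic IP per public subnet. Everything named "example" is hypothetical, and domain = "vpc" assumes AWS provider v5 or newer.

```hcl
# One Elastic IP per public subnet (hypothetical resource names)
resource "aws_eip" "nlb_example" {
  count  = length(module.vpc.public_subnets)
  domain = "vpc" # assumption: AWS provider v5+
}

resource "aws_lb" "nlb_static_example" {
  name               = "mynlb-static-example"
  load_balancer_type = "network"

  # subnet_mapping replaces the plain subnets list when pinning IPs
  dynamic "subnet_mapping" {
    for_each = module.vpc.public_subnets
    content {
      subnet_id     = subnet_mapping.value
      allocation_id = aws_eip.nlb_example[subnet_mapping.key].id
    }
  }
}
```

Each Availability Zone then gets a fixed public address that can go on a firewall allow list.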

Scaling Behavior

Same as ALB project:

- 2-5 instances

- Scales when CPU > 50%

- Scheduled scaling at 7 AM and 5 PM

Different from ALB project:

- No request count scaling

- Can’t scale based on HTTP metrics

Testing the Setup

After deploying, I tested both TCP and TLS:

Test 1: TCP (port 80)

```bash
curl http://nlb.rezaops.com/app1/index.html
```

Result: Works! Page loads.

Test 2: TLS (port 443)

```bash
curl https://nlb.rezaops.com/app1/index.html
```

Result: Works! Encrypted connection.

Test 3: Check actual IP

On my EC2 instance, I checked Apache logs:

```bash
sudo tail -f /var/log/httpd/access_log
```

With NLB, I saw real client IPs!

```
203.0.113.42 - - [15/Oct/2025:10:23:15] "GET /app1/index.html HTTP/1.1" 200 1234
```

With ALB, I would see ALB’s IP instead.
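As far as I understand, this behavior comes from the target group's client IP preservation setting, which is on by default for instance targets with TCP or TLS. If you want it spelled out in code, a standalone target group could set it explicitly. This is a sketch with hypothetical names; my project defines the target group inside the module instead.

```hcl
# Hypothetical standalone target group, shown only for the attribute
resource "aws_lb_target_group" "client_ip_example" {
  name        = "client-ip-example"
  port        = 80 # assumption: Apache listens on port 80
  protocol    = "TCP"
  target_type = "instance"
  vpc_id      = module.vpc.vpc_id

  # Explicit here; already the default for instance targets with TCP/TLS
  preserve_client_ip = true
}
```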

DNS Configuration

One small change in DNS: the record name and the module reference in c12 (c12-route53-dnsregistration.tf) need updating.

ALB DNS:

```hcl
resource "aws_route53_record" "apps_dns" {
  zone_id = data.aws_route53_zone.mydomain.zone_id
  name    = "asg-lt.rezaops.com" # ALB domain
  type    = "A"

  alias {
    name                   = module.alb.dns_name
    zone_id                = module.alb.zone_id
    evaluate_target_health = true
  }
}
```

NLB DNS:

```hcl
resource "aws_route53_record" "apps_dns" {
  zone_id = data.aws_route53_zone.mydomain.zone_id
  name    = "nlb.rezaops.com" # NLB domain
  type    = "A"

  alias {
    name                   = module.nlb.dns_name # Changed from alb to nlb
    zone_id                = module.nlb.zone_id
    evaluate_target_health = true
  }
}
```
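If you want Terraform to print the NLB's own DNS name after apply (handy for testing before the Route53 record resolves), an output like this should do it. The output name is my own choice; module.nlb.dns_name is the same attribute the alias record uses.

```hcl
# Hypothetical output name; value is the AWS-generated NLB hostname
output "nlb_dns_name" {
  description = "DNS name of the Network Load Balancer"
  value       = module.nlb.dns_name
}
```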

Use NLB if:

- ✓ Non-HTTP protocols (database, game server, custom TCP)

- ✓ Need static IP addresses (Elastic IPs)

- ✓ Extreme performance requirements (microsecond latency)

- ✓ Need to preserve client source IP

- ✓ Millions of requests per second

Use ALB if:

- ✓ Standard web application (HTTP/HTTPS)

- ✓ Need content-based routing (paths, headers)

- ✓ Want HTTP to HTTPS redirect

- ✓ WebSocket applications

- ✓ Container-based applications

- ✓ Lambda function targets

My project: I used NLB for learning. In real production, I’d use ALB because my app is just a simple website.

Deployment Steps

If you want to try this:

```bash
# 1. Clone my previous ALB project
cd "Auto scaling-with-Launch-Templates/terraform-manifests"

# 2. Change only 2 files:
#    - c10-02-ALB-application-loadbalancer.tf (rename to c10-02-NLB...)
#    - c13-06-autoscaling-ttsp.tf (remove ALB request policy)

# 3. Update c12-route53-dnsregistration.tf:
#    Change name from "asg-lt" to "nlb"
#    and point the alias at module.nlb instead of module.alb

# 4. Deploy
terraform init
terraform plan
terraform apply

# 5. Test
curl http://nlb.rezaops.com/app1/index.html
curl https://nlb.rezaops.com/app1/index.html

# 6. Clean up
terraform destroy
```

Time to deploy: Same as ALB (~10-15 minutes)

Total lines changed: About 30-40 lines out of 500+ lines. That’s less than 10%!

Conclusion

Network Load Balancer is much simpler than I thought. The main differences are:

- Operates at Layer 4 (TCP) instead of Layer 7 (HTTP)

- No HTTP features (no redirects, no path routing, no fixed responses)

- Faster and more scalable (microsecond latency, millions of connections)

- Preserves source IP (see real client IPs)

- Can have static IPs (Elastic IPs)

For learning purposes, swapping ALB with NLB was easy – only 2 files changed!

For production, I would choose:

- ALB for my web applications (99% of cases)

- NLB only when I need extreme performance, static IPs, or non-HTTP protocols

My next step: Learn about Global Accelerator, which can sit in front of both ALB and NLB for even better performance across global regions!

Project Details:

- AWS Region: us-east-1

- Domain: nlb.rezaops.com

- Load Balancer Type: Network Load Balancer (Layer 4)

- Protocols: TCP (port 80), TLS (port 443)

- Auto Scaling: 2-5 instances based on CPU

- Infrastructure: Same as ALB project (VPC, Auto Scaling, SNS, etc.)

Important: This is a learning project. For a real website, ALB would be better because it has more features (routing, redirects, etc.). Use NLB only when you specifically need its advantages!